Signaling fresh momentum for the tech industry’s next boom, Nvidia has forecast rapid growth in the already fervent demand for the chips needed to build artificial intelligence systems. The Silicon Valley company makes graphics processing units (GPUs), which play a pivotal role in the creation of most AI systems, including the widely used ChatGPT chatbot. Tech companies from startups to industry giants are scrambling to secure access to these GPUs.

Nvidia attributed its revenue growth for the second quarter, which ended in July, to intense demand from cloud computing services and other customers that need chips to power AI systems. Revenue rose 101 percent from the same period a year earlier, to $13.5 billion. Profit rose more than ninefold, to nearly $6.2 billion.

These results surpassed Nvidia’s own forecast from late May, when its projected revenue of $11 billion for the quarter astounded Wall Street and pushed Nvidia’s market valuation above $1 trillion for the first time. That upbeat forecast, together with Nvidia’s soaring market capitalization, has become emblematic of the mounting enthusiasm surrounding AI, a technology that is reshaping many computing systems and the way they are programmed. It also raised the stakes for Nvidia’s outlook on chip demand for the current quarter, which ends in October.

Nvidia forecast third-quarter sales of $16 billion, nearly triple the level of a year ago and approximately $3.7 billion above analysts’ average estimate of $12.3 billion. The financial performance of chip makers is often viewed as a harbinger for the broader tech industry, and Nvidia’s robust results could reignite optimism for tech stocks on Wall Street. While other tech giants like Google and Microsoft are spending heavily on AI development with relatively modest returns, Nvidia is already profiting from the trend.

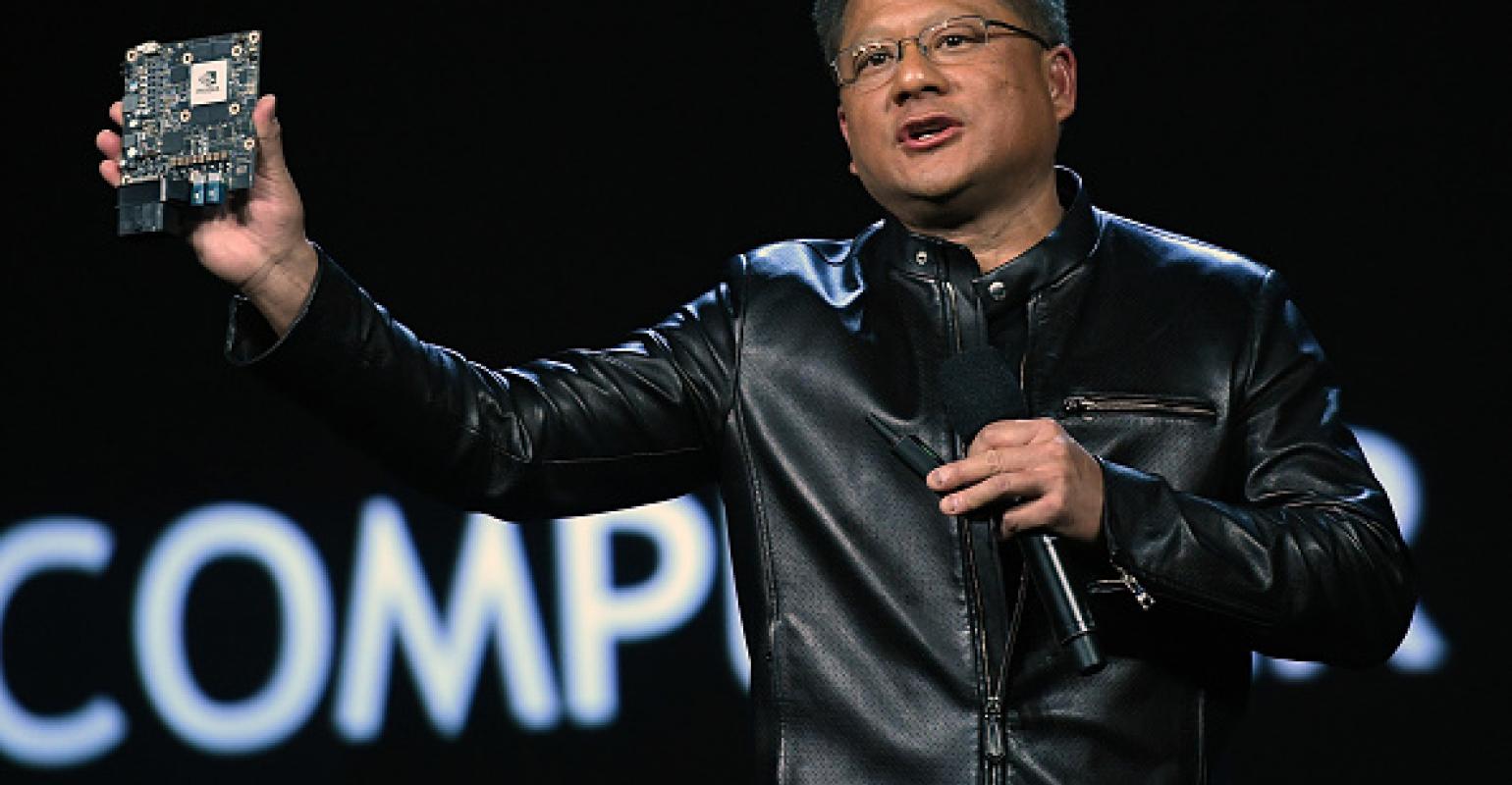

Jensen Huang, Nvidia’s chief executive, said that major cloud services and other companies are making significant investments to bring Nvidia’s AI technology to a wide range of industries, signaling the dawn of a new computing era. Nvidia’s share price rose more than 8 percent in after-hours trading following the announcement.

Until recently, Nvidia derived most of its revenue from sales of GPUs for rendering images in video games. That began to change in 2012, when AI researchers started using the chips for tasks such as machine learning. Nvidia capitalized on the trend by steadily enhancing its GPUs and accompanying software, making AI systems easier to program and use. Sales of chips for data centers, where a significant portion of AI training is conducted, have become Nvidia’s largest business. Revenue from that segment rose 171 percent to $10.3 billion in the second quarter.

Patrick Moorhead, an analyst at Moor Insights & Strategy, said that the rush to add generative AI capabilities has become a core imperative for corporate leaders and board members. For now, Nvidia’s main challenge is that it cannot make enough chips to meet the soaring demand, a shortfall that could create openings for major chip makers like Intel and Advanced Micro Devices, as well as startups like Groq.

Nvidia’s robust sales contrast sharply with the results of some of its peers in the chip industry, which have been hurt by tepid demand for personal computers and for data center servers used for general-purpose tasks. In late July, Intel reported a 15 percent decline in second-quarter revenue, though the result still surpassed Wall Street expectations. Advanced Micro Devices reported an 18 percent revenue drop for the same period.

Analysts say that spending on AI-specific hardware, including Nvidia’s chips and the systems that use them, may divert money from other data center infrastructure. IDC, a market research firm, projects a 68 percent increase in cloud service spending on server systems for AI over the next five years.

Demand is especially strong for Nvidia’s latest GPU, the H100, which is designed specifically for AI applications. The chips began shipping in September and have set off a race among large corporations and startups alike to secure supplies. They are made with an advanced fabrication process and use sophisticated packaging that combines the GPUs with specialized memory chips.

Nvidia’s ability to scale up shipments of H100 GPUs depends heavily on Taiwan Semiconductor Manufacturing Company, which handles both the fabrication and packaging of the chips. Industry insiders expect the H100 shortage to persist until at least 2024, posing a challenge for AI startups and cloud services that want to offer computing services built on the new GPUs.

(Source: Don Clark | New York Times)