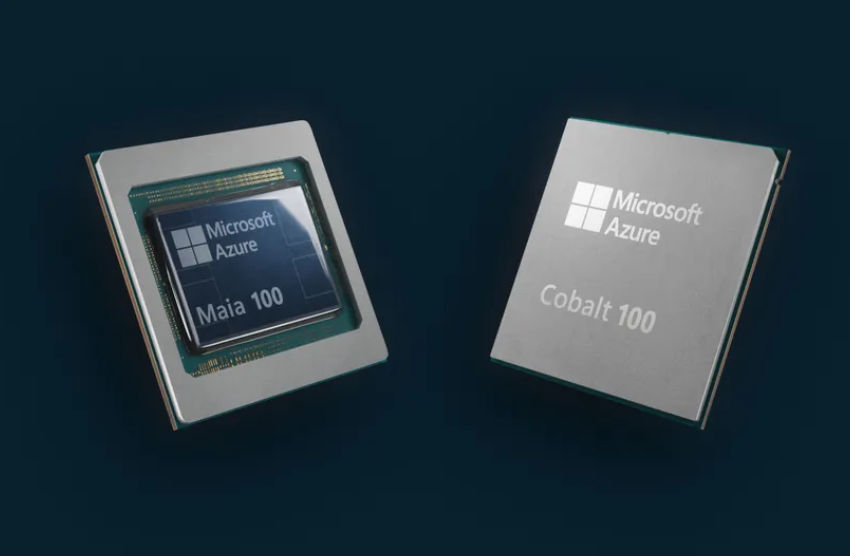

At Ignite, its annual conference for developers and IT professionals, Microsoft unveiled its long-anticipated custom cloud computing chip, the Azure Maia 100. The chip is optimized for generative AI workloads and contains 105 billion transistors, which makes it one of the largest chips built on a 5-nanometer process.

Microsoft describes the Azure Maia 100 as the first in a planned series of Maia AI accelerators. Alongside it, the company introduced its first in-house microprocessor for cloud computing, the Azure Cobalt 100. This 64-bit processor is based on the Arm instruction-set architecture and has 128 computing cores on a single die. Microsoft claims the Cobalt 100 uses 40% less power than the other Arm-based chips that Azure currently runs.

Both the Maia 100 and Cobalt 100 are equipped with 200-gigabit-per-second networking and deliver 12.5 gigabytes per second of data throughput. With these chips, Microsoft narrows the gap with Google and Amazon, which already design custom silicon for their clouds.
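A quick unit check helps when reading these two figures. A link rate in gigabits per second converts to gigabytes per second by dividing by 8 (bits per byte), so 200 Gb/s works out to 25 GB/s of raw line rate, while 12.5 GB/s corresponds to 100 Gb/s. The two numbers therefore are not a direct conversion of each other. The sketch below is only illustrative arithmetic; the function name is hypothetical, and it ignores encoding and protocol overhead.

```python
def gbps_to_gigabytes_per_s(gigabits_per_s: float) -> float:
    """Convert a raw link rate in gigabits/s to gigabytes/s (8 bits per byte).

    Illustrative only: ignores line encoding and protocol overhead.
    """
    return gigabits_per_s / 8


def gigabytes_to_gbps(gigabytes_per_s: float) -> float:
    """Convert gigabytes/s back to gigabits/s."""
    return gigabytes_per_s * 8


# Networking figure quoted for the chips
link_rate_gbps = 200
print(f"{link_rate_gbps} Gb/s = {gbps_to_gigabytes_per_s(link_rate_gbps)} GB/s")

# Throughput figure quoted for the chips, expressed as a link rate
throughput_gb_s = 12.5
print(f"{throughput_gb_s} GB/s = {gigabytes_to_gbps(throughput_gb_s)} Gb/s")
```

Running it prints `200 Gb/s = 25.0 GB/s` and `12.5 GB/s = 100.0 Gb/s`.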

Microsoft’s foray into custom silicon follows Google’s Tensor Processing Unit (TPU), introduced in 2016, and Amazon’s Graviton, Trainium, and Inferentia chips. Even with its own chips, Microsoft says it will keep working with Nvidia and AMD on Azure hardware: the company plans to add Nvidia’s H200, the latest chip in its “Hopper” GPU line, and AMD’s competing GPU, the MI300, in the coming year.

These custom chips are designed not only to power Microsoft’s own programs, such as Teams and Azure SQL, but also to run generative AI from OpenAI, a company in which Microsoft has invested $11 billion. With exclusive rights to programs such as ChatGPT and GPT-4, Microsoft is aligning its resources to supply the computing power behind OpenAI’s roadmap.

Microsoft CEO Satya Nadella, speaking at OpenAI’s recent developer conference, pledged to deliver “the best compute” for OpenAI’s projects. The partnership is meant to draw enterprises into adopting generative AI, and the strategy appears to be paying off: Microsoft reports substantial growth in its generative AI business, with paying customers for GitHub Copilot, one of its flagship products, up 40% in the September quarter alone.

Microsoft also announced that it is extending its Copilot assistant to Azure. Copilot for Azure, now in public preview, is pitched to system administrators as an “AI companion” that can generate deep insights instantly.

Beyond its chips, Microsoft announced the general availability of Oracle’s database programs running on Oracle hardware in Azure’s US East region. This makes Microsoft the only cloud operator other than Oracle itself to offer Oracle’s database on Oracle’s infrastructure.

The conference also saw the general availability of Microsoft’s hybrid and edge management service, Azure Arc, for VMware’s vSphere virtualization suite. The integration extends Microsoft’s reach in edge computing by letting businesses that run vSphere manage those virtualized environments through Azure.

In conclusion, Microsoft’s entry into custom silicon with the Azure Maia 100 and Cobalt 100 marks a significant milestone in its cloud and AI strategy. By pairing its own chips with continued partnerships with Nvidia, AMD, and Oracle, and by expanding its services, Microsoft is positioning itself to stay competitive in a fast-moving market.

(Source: ZD Net | CNBC | The Verge)